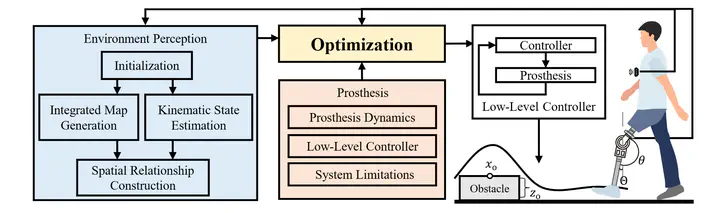

Illustration of the framework for powered knee prostheses to achieve obstacle avoidance

Abstract

This paper presents a novel framework that enables powered knee prostheses to avoid obstacles by directly integrating environmental information. Unlike conventional approaches, which infer obstacle information from human motion patterns, our framework employs a two-stage strategy: a front-end perception system measures the obstacle's dimensions and its spatial relationship to the prosthesis wearer, and a back-end optimization module generates an appropriate avoidance trajectory. This direct perception approach addresses limitations of current methods, particularly for obstacles of diverse sizes, where human-motion-based inference or simple distance measurements may prove insufficient. The framework’s effectiveness is preliminarily verified through simulation studies across varying obstacle dimensions and positions. The results suggest that directly incorporating environmental information into prosthetic control could lead to more robust and adaptable obstacle avoidance strategies for powered knee prostheses.
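
To make the two-stage idea concrete, the following is a minimal illustrative sketch and not the method used in this paper. It assumes the front end reports three hypothetical quantities (distance to the obstacle's near edge, obstacle depth, obstacle height) and that the back end shapes a half-sine foot-clearance profile over a fixed step length. The names `Obstacle`, `min_peak_height`, `foot_trajectory`, and the 3 cm safety margin are all assumptions introduced for illustration; a real controller would optimize knee joint trajectories rather than foot height directly.

```python
import math
from dataclasses import dataclass


@dataclass
class Obstacle:
    """Hypothetical front-end output (all values in metres)."""
    distance: float  # from the foot at swing start to the obstacle's near edge
    depth: float     # obstacle extent along the walking direction
    height: float    # obstacle height above the ground


def min_peak_height(obs: Obstacle, step_length: float, margin: float = 0.03) -> float:
    """Smallest peak H such that z(s) = H * sin(pi * s) clears the obstacle.

    The foot is assumed to advance linearly, x(s) = step_length * s for s in [0, 1].
    Because sin is concave on [0, pi], the lowest point of the profile above the
    obstacle is at one of the obstacle's two edges, so checking the edges suffices.
    """
    s_near = obs.distance / step_length
    s_far = (obs.distance + obs.depth) / step_length
    if not (0.0 < s_near < s_far < 1.0):
        raise ValueError("obstacle must lie strictly inside the step")
    worst = min(math.sin(math.pi * s_near), math.sin(math.pi * s_far))
    return (obs.height + margin) / worst


def foot_trajectory(obs: Obstacle, step_length: float, n: int = 51, margin: float = 0.03):
    """Sample n (x, z) points of the assumed half-sine clearance profile."""
    peak = min_peak_height(obs, step_length, margin)
    return [
        (step_length * i / (n - 1), peak * math.sin(math.pi * i / (n - 1)))
        for i in range(n)
    ]


# Example: a 10 cm high, 20 cm deep obstacle starting 30 cm ahead, 80 cm step.
obstacle = Obstacle(distance=0.30, depth=0.20, height=0.10)
trajectory = foot_trajectory(obstacle, step_length=0.80)
```

In this sketch a taller or deeper obstacle, or one closer to either end of the step, raises the required peak, which illustrates why a distance measurement alone may not be enough to choose a suitable trajectory.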